Episode Transcript

0:02

They're only 20, they're only 21. I

0:04

think they've done a pretty good job.

0:06

Well you think they have, well done.

0:08

You're saying well done from Carole. I'm

0:12

not saying well done. From, here's your little golf clap.

0:14

Nice one. Nicely done you're saying.

0:26

Smashing Security Episode 386 The $230 Million

0:28

Crypto Handbag Heist and

0:34

misinformation on social media with Carole

0:36

Theriault and Graham Cluley. Hello, hello

0:38

and welcome to Smashing Security Episode

0:40

386. My

0:42

name is Graham Cluley. And I'm

0:44

Carole Theriault. Another late

0:46

night recording for me. Maybe

0:49

for you, depending on where you are in the world. I'll

0:52

be back in the office soon. It's a mystery.

0:54

Nobody knows, but you'll be back in the

0:56

same time zone soon. Very soon. Now

1:01

we have a packed show, but

1:03

before we kick off, let's thank

1:05

this week's wonderful sponsors, 1Password,

1:07

Vanta and SentinelOne. Now coming

1:09

up on today's show, Graham, what

1:11

do you got? I'm

1:13

going to be talking about cryptocurrency and handbags.

1:17

And I'm going to look at the

1:19

glut of disinformation on the socials and

1:21

what can we do about it. All

1:23

this and much more coming up

1:25

on this episode of Smashing Security.

1:32

Now, chums, chums, today I've

1:35

got a fascinating story for

1:37

you. OK. About a

1:39

couple of young fellas who

1:42

have been arrested and charged in

1:44

the United States of America. OK.

1:48

So this is the story of 20

1:50

year old Malone Lam is

1:53

one of these fellows of Miami

1:55

and Jeandiel Serrano, 21 of

1:57

Los Angeles. In

2:00

their early 20s. So very young

2:02

adults. Very young. And oh,

2:04

by the way, some of this story is only possible to

2:06

tell because of some

2:08

extraordinary investigative work done by

2:11

ZachXBT. Are you familiar

2:13

with ZachXBT? No.

2:16

Hi, Zach. Hi, Zach.

2:18

If you're listening, Zach is a

2:20

crypto investigator. You can follow on

2:22

Twitter. Over 600,000 people are following

2:25

ZachXBT on

2:28

Twitter because his investigations are

2:30

very interesting. He's well known

2:33

for exposing scams and hacks

2:35

and unethical practices in

2:37

the crazy world of cryptocurrency. Cool.

2:40

And he regularly uses his expertise

2:42

with the old blockchain analysis to

2:44

track down funds that may have

2:46

been stolen and identify people behind

2:49

crypto crimes. Okay, so he's a

2:51

cool guy to have on your

2:53

side if you find yourself at

2:56

the sharp end of a cryptocurrency scam. If

2:59

you want to know who's got your money and

3:01

maybe how to get it back, you would call

3:03

in someone like ZachXBT. Okay,

3:06

yeah, I'm following you. Good. All

3:08

right. Now in the last few

3:10

days, we've seen a press release

3:12

by the US authorities about the

3:14

arrests and charges against Malone Lam

3:16

and Jeandiel Serrano. And

3:18

they are accused of

3:21

stealing and laundering some

3:23

cryptocurrency. Now, what

3:25

makes this case unusual is

3:28

the amount of cryptocurrency because I think

3:30

you would agree with me that stealing

3:33

$50 million

3:36

worth of cryptocurrency. That's

3:38

a lot of wonga. Would be a lot. Two

3:40

young guys who are mostly interested in, I don't

3:43

know, Lynx aftershave and I

3:48

don't know. Well, 50 million would be a

3:50

lot, wouldn't it? What about almost one quarter

3:52

of a billion dollars? One quarter of a

3:54

billion dollars of

3:59

cryptocurrency? Am I correct in surmising that's

4:01

250 million dollars.

4:03

So it was roundabout I think it's

4:05

230 or 240 million

4:08

dollars. Okay how did they do this?

4:10

How did they do this? Do you

4:12

know? I want to hear. Well hang

4:14

on it's not just the amount of

4:16

money that they are accused of stealing

4:18

okay, it's the fact that they are accused

4:20

of stealing it from

4:23

one person. Oh

4:25

there is a guy in Washington DC

4:27

right now with a hole the size

4:30

of a quarter of a billion dollars

4:32

in his cryptocurrency. I shouldn't laugh.

4:35

I imagine he's rather

4:37

depressed. He's lost a quarter

4:39

of a billion dollars unless

4:42

you're, you know, Jeff Bezos or something.

4:44

Yeah it's gonna it's gonna hurt it's

4:46

gonna hurt I suspect. Yeah

4:49

I don't think there's a band-aid big

4:51

enough, you know. Now, according to

4:53

ZachXBT, this crypto investigator, the hackers were

4:57

remarkably inept because

5:01

not only did they fail to cover the

5:03

tracks of this

5:05

alleged hack (by the way, insert lots of

5:07

"alleged"s and things during the rest of this;

5:10

it's all allegations, all allegations, right? It hasn't gone

5:12

through the court system yet), but not only

5:14

did they fail to cover their tracks, which

5:17

is why the arrests have happened

5:19

so quickly, because this breach of

5:21

this cryptocurrency one, it only

5:23

happened a month ago, right? But

5:26

it appears the hackers also documented

5:28

their crimes making

5:30

it easy for the feds to build

5:32

a case against them. In fact, they

5:35

didn't just document their crimes,

5:37

they actually recorded the entire

5:40

heist in a movie. What?

5:43

They recorded that they recorded

5:45

themselves talking on a

5:47

Discord channel, and you can see

5:49

them typing to each other, you

5:51

can hear them celebrating the theft

5:54

of a quarter of a billion

5:57

dollars near enough. Oh

6:00

my god! Oh my god! Don't

6:03

throw them in spaz out! You!

6:05

We're done! We're done! Who

6:07

spazzed out? So

6:10

they were so confident at how

6:12

their ruse, they

6:14

thought, let's just record it online. What, are

6:17

they wearing little masks and stuff? So we

6:19

can't tell who they are and all this?

6:21

You don't see them on the screen. You

6:23

just see their conversation. You just see their

6:25

screens. Exactly. You hear them talking. You see

6:27

their conversation going. Right. And you hear their

6:29

whoops of delight. And I think they

6:32

were possibly a little bit astonished as to how much

6:34

money they were grabbing. They

6:36

maybe weren't expecting it. I'm just wondering, that

6:38

might give away a lot of information is

6:40

that you have a real time effort going

6:42

on Discord at the same time that 250

6:44

million dollars goes whoop. Yeah,

6:48

because they're texting each other. They're incriminating themselves.

6:50

And so occasionally when they're moving their windows

6:52

and things on the screen, there may be

6:54

other pieces of information which are revealed, which

6:56

may indicate their true identities, their use and

6:59

their use. They're only 20. They're only

7:01

21. I think they've

7:03

done a pretty good job. Like, I don't know.

7:05

Well, you think they have? Well done? I'm

7:10

not saying well done. From, here's your little golf clap.

7:12

Nice one. Nicely done, you're saying.

7:16

I'm just surprised they got as far as they

7:18

did. Whatever. I just was

7:20

an idiot at that age. So maybe

7:22

perhaps I'm projecting. Yes,

7:24

they've somehow incriminated themselves. They were discussing how

7:27

they were going to launder the funds. It's

7:29

like they've got all this money now. Because

7:31

that's the thing, Carole, right? I don't know

7:33

if you've ever had 240 odd million dollars

7:35

in your pocket. No.

7:39

Let me tell you, it's not that

7:41

simple having money. Because

7:43

how are you going to spend it? It's

7:46

a pain. It's burning a hole in your pocket, isn't

7:48

it? It's like, well, what can I do with this?

7:50

What are you going to buy with it? Are you

7:52

just going to buy pizza? What are you going to

7:54

do? Is this really

7:56

a problem that people face? I think

7:59

it is a problem. Friend of the

8:01

show Geoff White, he's written a book

8:03

all about how you launder money and

8:05

rinse money which you've grabbed through cybercrime.

8:07

It's complicated to do. And of course

8:09

it can be complicated to follow the

8:11

leads as well. So they were talking

8:13

about how they're going to launder the

8:15

funds. They even taunted cryptocurrency investigator

8:17

ZachXBT. Oh, by name. I

8:20

guess they were thinking, you know, he's going

8:22

to be after us and we're going to

8:24

just flick him the Vs. Yeah.

8:27

And they failed to understand what was going to

8:29

become of them. So reports suggest that

8:31

the heist began on August the 19th

8:33

of this year. So just about a

8:35

month ago. And from what

8:37

I've read, it looks like these

8:40

men, allegedly, allegedly contacted

8:43

their intended victim by posing as

8:45

Google support, they used a spoofed

8:47

telephone number, they tricked the victim

8:49

into sharing their screen. One

8:51

thing they did was they rang up at

8:53

one point claiming to be from the cryptocurrency

8:56

exchange. And they said, you know,

8:58

there's been a breach of your account, we

9:00

need to be careful, we need to confirm

9:02

your identity. Can you share the last four

9:04

digits of your private key?

9:06

Don't send us the whole private

9:09

key, they said. Oh, wow. The

9:11

last four digits. Now, it's clever.

9:13

It's clever. Carole, it's so

9:15

clever. Because that's what you do

9:17

with credit cards, right? Well, that is what

9:19

you do with credit cards and bank cards.

9:21

Yeah. But the last four digits alone weren't

9:24

going to allow these scammers to access the

9:26

account, right? And it's not as though they

9:28

had the rest of the private key. But

9:30

what they said, they've raised their chances

9:33

quite a bit. They have. But listen

9:35

to this. Listen to this. Okay, what they said to this

9:37

victim was, don't worry, they

9:39

said, can you take a photograph of the

9:41

private key and crop

9:43

the picture. So we only see

9:46

the last four digits. Oh my

9:48

god, they had already

9:50

compromised this guy's computer. So

9:53

they could see his screen. So

9:55

when he cropped it in Microsoft

9:58

Paint or whatever he was using,

10:01

they saw all the key. Jesus.

10:03

Oh my God, it's kind of clever. See,

10:05

20, 21, just saying. They

10:10

also duped him into resetting

10:12

the multi-factor authentication protecting his

10:14

wallet and so they were able to transfer

10:17

the funds allegedly. I'm not thinking this guy

10:19

was a complete idiot if you know he

10:21

allegedly fell for all this, but

10:27

it's scary. Like you think

10:29

you'd be really careful with that amount of

10:31

money, right? You'd of course, and of course

10:33

you're panicking. Well, exactly, because you think Google

10:35

have rung you up, you think the crypto

10:38

company has contacted you. And they've worked hard

10:40

on their little pitter-patter to convince you pretty

10:42

quickly. So

10:44

what we've got here is a couple of

10:46

dweebs with 230

10:49

million dollars in their

10:51

pockets. What are they going to do with it? It's like

10:53

I said, what are they going to do with it? Well,

10:55

girls, girls, girls, exactly. No,

10:58

what they really, really don't have

11:00

in their life are

11:02

friends, right? They don't have

11:05

much in the way of friends. They

11:07

certainly don't have girlfriends. So

11:10

these guys apparently were allegedly

11:12

spending five hundred thousand dollars

11:14

a night at

11:17

nightclubs. They were

11:19

buying hundreds and hundreds of bottles of champagne. It's

11:21

like, hey, hey, we're having a party. Well, I'm

11:23

going to buy everybody in the club a

11:26

bottle of champagne. They

11:28

were going up to random girls and

11:30

they hired people to hold up placards

11:33

saying, do you want a free designer

11:35

handbag? And all these women

11:37

who were interested in designer handbags were

11:40

being approached or would trot over to

11:43

these guys and say, yeah, we'd like one.

11:46

And they would give them a luxury handbag in the hope

11:48

that they would go out with them. One

11:50

of them was sent a message by one of these

11:52

guys and he said, I've got

11:55

you a present. We'll call it an early

11:57

birthday gift. A thank you gift. I appreciate

11:59

you so much. Okay. So

12:01

you're wondering what was the present? Hmm.

12:04

A bright pink Lamborghini

12:07

car. Subtle, subtle. Classy.

12:12

Very classy. You know, has gravitas.

12:15

Gave it to a complete stranger in the

12:18

hope that she'd go out with him.

12:20

Her response was, I've already got a

12:22

boyfriend. I'm not interested. Thanks

12:25

a lot for your big car. But yeah,

12:27

thanks. Another woman

12:29

who received a designer handbag is

12:31

a food blogger and podcast star.

12:33

I found her on TikTok. Her

12:35

name is Skyla Harrison. Me

12:38

and my two girlfriends walk over to the section and

12:40

this kid, I'm gonna refer to him as like a

12:42

kid. I mean, he was definitely over the age of

12:44

18 or 21, hopefully, because he was at

12:47

the club. But he looked pretty

12:49

young. He comes towards me and he's

12:51

like, I got this for you. And he

12:54

hands me the box, he opens

12:56

it and he's like, Do you like it? And

12:58

I was like, Yeah, I do. But like, is

13:00

it real? And he was like, Of course, it's

13:03

real. Like, it's for you. You can have it

13:05

and just walks away. Anyways, while I'm

13:07

at the club, I see like one other

13:09

it's like a light pink one, I think.

13:11

And then the day after so like yesterday,

13:13

I think I saw a girl post a

13:15

TikTok about how she got gifted one by

13:18

the same guy, same club. Hers, I think

13:20

was like lime green. But yeah, that was

13:22

it. Like he literally just walked away, he

13:24

handed it to me. This

13:27

is it. It's beautiful. But to be

13:29

honest, it's not really my style. Wow.

13:33

So that's Skyla. Again,

13:36

she declined to go out with either of

13:38

these guys, as far as we know, they

13:40

didn't manage to get any girlfriends. So if

13:42

you're currently trying to amass a multimillion fortune,

13:45

if you're spending all your time building your

13:47

dotcom company or engaged in cryptocurrency scams, or

13:49

whatever it may be that you're doing out

13:51

there, folks, don't imagine that once you have

13:54

all this money, you're actually going to succeed

13:56

in getting yourself a girlfriend. It doesn't always

13:58

work. Not only that, it's a guarantee

14:00

that you're not going to go under the

14:02

radar. Right. Exactly. Carole,

14:14

what's your story for us this week? Okay,

14:16

so last week, Pew, the

14:18

research group, they published

14:20

a report that said

14:22

basically more than half of US adults,

14:25

so 54%, occasionally

14:27

get their news from social

14:29

media. Right. And this, they

14:31

say, is up slightly compared to the

14:33

last few years. And research group Statista

14:35

also report that Americans are using social

14:37

media as a source of news, with 38%

14:40

of adults in the US currently using social

14:42

media for information about the 2024 presidential

14:45

election. Yeah. So,

14:47

conservatively, I think you

14:49

and I can say that two out of every

14:51

five people in the US, their viewpoints are

14:53

being slightly formed, at least in part, by reading

14:56

the socials. And

14:58

I suspect it's probably higher than that. I mean,

15:00

every person that you run into and says, oh,

15:02

did you read blah, blah, blah, blah, blah? And

15:05

then it's in your memory bank. And the chances are

15:07

it came from social media. And

15:09

I wouldn't, I would argue probably not many of us are, you

15:11

know, are very good at saying, where did you get your sources?

15:13

Send me all the details. I

15:15

think a lot of news breaks on social media,

15:18

and it is where a lot of people hang

15:20

out. You're more likely to get your news, I

15:22

suspect, from social media these days, than tune

15:24

into the nightly news at nine o'clock.

15:28

How much time do you think the

15:30

average American spends on social

15:32

media platforms a day? A

15:36

day? A day. It's

15:38

going to be less than 25 hours a day. I

15:42

can be fairly confident of that. Okay,

15:46

let's narrow it down. Okay,

15:48

so let's assume that people

15:50

sleep for eight hours a day, and

15:53

then mostly not on social media

15:55

then. So that gives us 16

15:57

hours remaining. I'm going to say...

16:00

Mmm eight hours a

16:02

day. You're such a ridiculous person. For

16:06

the average American, the answer

16:08

is two hours and 14 minutes

16:10

a day. Okay, so two hours.

16:12

Sorry. Sorry. All right,

16:14

two hours a day, not eight. It's not a

16:16

full-time job. It's a part-time job, two hours and

16:18

14 minutes a day. I think

16:20

people are on longer than that, I think. I

16:22

mean, oh, I would agree. This is probably what

16:25

people say they are, because you know what? I've

16:27

seen a lot of people these

16:29

days, I think they're actually making TV programs

16:31

with this in mind now. I've

16:33

noticed a lot of people now, when they watch

16:35

TV, they are dual screening. They're

16:37

looking at the phone while they're watching TV. Yeah,

16:41

I'm not cool enough to do that. But

16:43

yeah, a lot of my younger buddies do

16:45

that constantly here. Yeah. Yeah, exactly. So I'm

16:48

thinking, you know, this is, and

16:50

I mean, okay, you get it, socials, as we know, are

16:52

designed intrinsically to

16:54

be checked all the time. They're difficult to

16:56

look away from because there's always something interesting

16:59

popping up around the corner. Yeah, and I

17:01

mean, what else you gonna do while you're,

17:03

you know, commuting to work or having a

17:05

coffee or, you know, let's be honest, a

17:08

poo? I

17:10

wrote that and then, and then I thought, actually, I

17:12

wonder if ChatGPT wants to get in on this.

17:14

So I asked it what percentage of people admit to

17:16

using the phone while on the toilet. And

17:19

it wrote, quote, the percentage seems to typically fall in

17:21

the range of 75 to 90 percent, depending

17:25

on the demographic and how the question

17:27

is phrased. It's a common behavior across

17:30

various age groups. Don't you think it's

17:32

time we started using Wi-Fi-repellent

17:36

paint in lavatories?

17:39

Interesting. Interesting. It's a signal

17:41

in there. That'd be a great idea. Wouldn't

17:43

it? I think it's kind of ironic that

17:45

we're filling our heads with poop from the

17:48

socials as we literally evacuate our bodies. Now

17:52

who might you think

17:54

are the head social media

17:56

honchos when it comes to people going

17:58

to them for their news fix? So,

18:00

like, who's the numero uno news

18:02

fix social site? According

18:05

to Pew. I'm

18:07

going to say TikTok. Twitter.

18:12

I didn't say TikTok, I said Twitter.

18:14

I said Facebook. And it's way

18:17

ahead. It's way ahead. Where do people

18:19

go to get their news? This

18:22

is probably because you're not thinking of it as a

18:24

social media platform. YouTube.

18:27

Yep. YouTube. Yeah.

18:30

Highest usage amongst US adults with 83% using the platform. Can

18:35

you guess how long people play

18:37

on YouTube every day? You're

18:40

going to say something like eight hours. No, it's 46 minutes.

18:44

Yeah, there's a maximum of two hours. So okay.

18:47

Yeah. And the next after that is Facebook.

18:49

Seven out of 10 say they use

18:51

it and spend on average 30 minutes a day

18:53

or 31 minutes a day on Facebook. Okay. 46

18:56

plus 31. Let me see what's left over. We know,

18:59

if we've been listening to this show, that many

19:01

nasty things lurk on these social sites, right?

19:03

So the deepfakes, my new

19:06

word, rom-conners,

19:08

rom-conners, romance cons. Did you come up

19:10

with that? It's not mine. It's

19:12

not mine. No, no, no. I

19:14

stole it, but I love it. Crypto

19:16

nonsense, like misinformation campaigns, disinformation campaigns, poison

19:18

ads, all the stuff. All

19:21

of these things are for us like you

19:23

and me, the average Joes and Josies out

19:25

there. And our job is to

19:27

slalom through every time we use these sites to

19:29

get our news fix and hope that we're not

19:31

hitting something bad.

19:34

Now some experts place the blame, and I'm

19:36

interested in your view on this, right,

19:39

on the fundamentals, like how the social

19:41

media platforms actually work. So

19:43

typically these sites reward you if

19:45

you have more followers, more

19:47

likes, more shares, you know, people want to hear

19:50

what you're saying. And

19:52

to build up this following, you

19:54

don't tend to push out moderate

19:56

viewpoints, right? They don't get

19:58

the eyeballs, the shares, the likes. But you

20:00

certainly don't get the same ones that comments

20:02

that are more extreme in viewpoint might. Do

20:05

you agree with that? Yeah, I do agree

20:07

with that. In my particular world, the thing

20:09

I'm obviously fascinated with, well, one of my

20:11

interests is Doctor Who. And there

20:13

are, there,

20:16

it's a very fractious community. There are people

20:18

who aren't very happy with Doctor Who, or

20:20

maybe some of the decisions made by the

20:23

production team in the last few years. And

20:26

those people, who maybe are

20:28

against certain things happening in Doctor

20:30

Who, get all of the eyeballs.

20:32

And it feels like people are

20:34

deliberately making videos being outraged and

20:37

angry. And, you know, they're

20:40

really right on the edge in terms of

20:42

opinions compared to the average sort of laid

20:44

back fan. And I

20:46

suspect they're doing it because they make more

20:48

money, because they get more views, which means

20:50

that it's feeding into them. And so that

20:53

they are having to churn out more and

20:55

more outraged and shocked and astonished videos, because

20:57

that is what actually works with the algorithm

20:59

and gets them more views and makes them

21:01

more money. I think you're absolutely right. While

21:04

I was researching this story, I

21:06

found a CBS interview with a

21:09

guy called Chris Bail. He's the

21:11

founder of Duke University's Polarization Lab.

21:13

Right. And he says the incentive

21:15

structure on social media platforms leads

21:18

to more extreme content rising to

21:20

the top, right, as algorithms promote

21:22

what gets high engagements, reactions, comments

21:24

and shares. I wonder,

21:26

do you know which tweet, for example, I

21:29

know you're a twatterer or a tweeter or

21:31

whatever, an X-er. Do

21:35

you know what your most successful tweet was and would

21:37

you share it with us? I

21:39

don't know off the top of my head. I

21:41

could probably. Oh, no, actually, I'm not allowed access

21:43

to Twitter analytics anymore, because Elon Musk makes me

21:46

pretend to be a business and give him thousands of

21:48

pounds to find out. So

21:51

I don't know, I'm afraid. No. All

21:53

right. OK. But this

21:55

Polarization Lab founder also had this to say,

21:58

which I found interesting. So he says,

22:00

quote, when we look at people who

22:02

are highly politically active on Twitter, we

22:05

find that about 70% of the content

22:07

about politics is generated by just 6% of the

22:10

people. And those 6% are

22:13

people disproportionately very liberal or very

22:15

conservative. And

22:17

so when we wander onto social media,

22:20

we can wrongly conclude that everyone has

22:22

quite strong and extreme views. And

22:26

like that everyone is sort of out to get everybody

22:28

else. And that may not be the case. Yeah,

22:30

yeah, totally. Now there

22:33

are many efforts out there trying to

22:35

figure out how to control this beast

22:37

called misinformation. One ZDNet wrote about, which

22:40

is a really interesting article. This

22:42

is about the Coalition for Content

22:44

Provenance and Authenticity, the C2PA. This is

22:47

led by Linux, the Linux Foundation. And

22:50

it's basically an open standards body

22:52

looking into embedding metadata or watermarks

22:54

into images, videos, and audio files.

22:57

And the specification makes it possible to

22:59

track and verify the origin, creation, and

23:02

modification of the digital content. Now,

23:04

loads of big dudes are in there.

23:06

Google, Microsoft, Meta, OpenAI, they all contribute.

23:09

TikTok also joined apparently the first

23:11

social media platform to implement content

23:13

credentials apparently. But

23:15

notably Apple and X are

23:18

not on board as yet. And my question

23:20

is why are they hesitating? There

23:24

are studies on soft moderation techniques. Have you heard of these? Soft

23:26

moderation. Is that where you leave it, leave

23:29

it to the other users to moderate themselves

23:31

rather than hiring people to do

23:33

it? One, the study that I saw, it's about footnotes, warning

23:35

labels, and blurring filters were examined. Oh

23:39

yeah. And anyway, the claim of this paper, it's

23:41

linked in the show notes, right? They say

23:44

that both interventions reduced engagement with posts

23:46

containing false information with

23:48

no significant difference between the two. So that's

23:50

interesting. It is interesting. I've

23:53

seen people leave community notes in the

23:55

past on some of Elon Musk's own tweets, where

24:00

they've gone, that's not actually true

24:02

what you've posted there or what

24:04

you've retweeted to your millions

24:06

and millions of followers. You do worry

24:08

though that some people won't pay any attention to those

24:10

notes. And they are of course posted

24:13

up later. They're not there at the

24:15

time of the initial publication of the

24:17

post or the tweet they follow later

24:19

on. And I mean,

24:21

we even saw California this week, you

24:23

know, in an effort to calm political

24:26

misinformation now requires social media companies to

24:28

moderate the spread of election related deep

24:30

fakes. So basically the world

24:32

over it seems people are grappling with

24:34

how to get the misinformation genie back

24:36

in the bottle. But you

24:38

know, were they to ask me, I've

24:41

got a good idea. Have they not asked you? No, they have

24:43

not. Well, they might have. I haven't read all my email. But

24:48

here's my idea, right? Okay, but it takes all of us

24:50

for this to work. And I'm hoping you're going to be

24:52

on board, Cluley. Of course, I

24:54

will be. Okay, so I'm using the premise, if

24:56

we want social media companies to do better, we

24:58

need to hit them where it hurts. And that's

25:00

the wallet, right? So I

25:02

suggest that we all take a first step and

25:05

fight for no social

25:09

media on the loo. Okay,

25:11

so you have to come up with a cute little

25:13

hashtag for this. But instead of filling your head with

25:15

nonsense from socials while you're enjoying your private time, perhaps

25:17

instead, you know, delete unwanted photos, review

25:19

your security settings, or, you

25:21

know, just go old school and read the ingredients on

25:24

your shampoo bottle. Because, I mean,

25:27

seriously, this will make an impact. We spend about

25:29

10 to 30 minutes a day, apparently

25:31

on the bog. That's 10,000 minutes

25:34

a year. And I think

25:36

I think we're speaking with our wallets, by which

25:38

I mean, yeah, our butts, which

25:41

you know, seems apropos when we talk about

25:43

social media. So I've just asked AI to

25:45

come up with some. I don't

25:47

know if it's going to be any good, right? Leave

25:50

the scrolling for the toilet paper.

25:53

Give your thumbs a break. They deserve it. That's

25:55

what you do with your thumbs. Oh, I

25:58

see. Okay, don't let your

26:00

phone drown in the porcelain sea. Oh,

26:02

yeah, poetic. Keep your

26:05

private business private. I don't, I didn't

26:07

mean, when I asked the question, I

26:09

didn't mean taking photos. Flush your worries,

26:11

not your feed. All right. Sorry,

26:14

AI is just rubbish, isn't it? Any listeners

26:16

out there who can beat AI, we're

26:19

dying to hear from you. Thank you very much.

26:21

That's my story for this week. Support

26:23

for today's podcast comes

26:26

from SentinelOne, which

26:28

secures and protects every

26:30

aspect of your cloud

26:33

in real time. Discover

26:35

all your assets and deploy AI

26:38

powered protection to shield your cloud

26:40

from build time to runtime. On

26:43

top of that, SentinelOne offers

26:45

threat hunting, visibility and remote administration

26:47

tools to manage and protect any

26:49

IoT devices connected to your network.

26:52

Looking for a cloud native application protection

26:54

platform? SentinelOne

26:56

is your ultimate CNAPP solution.

26:59

Go to smashingsecurity.com/sentinelone for

27:01

more information and a free

27:03

demo. See what a

27:05

flexible, cost effective and resilient cloud

27:08

security platform can do for your

27:10

organization with SentinelOne. That's

27:13

smashingsecurity.com/sentinelone. Question,

27:21

do your end users always,

27:23

and I mean always without

27:25

exception, work on company owned

27:27

devices and IT approved apps?

27:30

I didn't think so. So my next

27:32

question is, how do you keep your

27:34

company's data safe when it's sitting on

27:37

all of those unmanaged apps and devices?

27:40

Well, 1Password has an answer

27:42

to this question, and it's called

27:44

Extended Access Management.

27:46

1Password Extended Access Management helps

27:48

you secure every

27:50

sign-in for every app on

27:53

every device because it solves

27:55

the problems traditional IAM and

27:58

MDM can't touch. Go

28:01

and check it out

28:03

for yourself at 1password.com/smashing.

28:05

That's 1password.com/smashing and thanks

28:07

to the folks at

28:09

1Password for supporting the

28:11

show. Whether

28:16

you're starting or scaling your

28:18

company's security program, demonstrating top-notch

28:20

security practices and establishing trust

28:23

is more important than ever. Vanta

28:25

automates compliance for SOC 2,

28:28

ISO 27001 and more, saving

28:30

you time and money while

28:32

helping you build customer trust.

28:35

Plus, you can streamline security

28:37

reviews by automating questionnaires and

28:39

demonstrating your security posture with

28:42

a customer-facing trust center all

28:44

powered by Vanta AI. Over

28:47

7,000 global companies like

28:49

Atlassian, Flo Health and Quora use

28:52

Vanta to manage risk and prove

28:54

security in real time. Get

28:57

$1,000 off Vantar when

28:59

you go to vanta.com/smashing.

29:01

That's vanta.com/smashing for $1,000

29:04

off. And

29:09

welcome back and you join us at our favorite part of the show, the part of the

29:11

show that we like to call Pick of the Week. Pick of the Week. Pick

29:18

of the Week is the part of the show where everyone

29:20

chooses something they like. It could be a funny story,

29:22

a book that they've read, a TV show, a movie, a

29:24

record, a podcast, a website or an app. Whatever

29:27

they wish. It doesn't have to be security related

29:29

necessarily. Better not be. Well,

29:33

my Pick of the Week this week is not

29:35

security related. Good. My Pick of the Week this

29:37

week is a YouTube

29:39

video. During my

29:41

eight hours on YouTube today, I stumbled

29:44

across a

29:47

video made by a Norwegian, a

29:50

Norwegian called Lasse Gjertsen.

29:53

And he made this video way back in 2005,

29:55

19 years ago. Before

30:00

the iPhone existed, can you imagine what life

30:02

was like in 2005? Yes,

30:05

I remember. What a different

30:07

world it was. Was it a better world? Was

30:10

that a BlackBerry time? Yes,

30:12

I think it was when phones had keyboards.

30:15

So this video, I think actually millions

30:17

of people have seen it, but I saw it for the

30:19

first time today. It's called

30:22

Hyperactive, and

30:24

it is this guy, Lasse

30:26

Gjertsen, performing

30:28

as a human beatbox. Now

30:30

we've seen beatbox videos before,

30:33

right? Yes. In

30:37

this particular case, he's doing all that, but what

30:39

he's done is he's edited it, and

30:41

it must have taken him a long time, I'm sure,

30:43

editing this darn thing. But he's

30:45

edited this together. So it's

30:47

just him looking at the camera with lots

30:50

and lots of cuts, and for having done

30:52

this in 2005 in his bedroom, I

30:54

think it's pretty impressive, and that's why what

30:57

you can hear right now is him doing

30:59

his beatboxing, but you've really got to see

31:01

it. It's a bit like Max Headroom or

31:03

something like that. Have

31:05

you seen this, Carole? No, I haven't.

31:07

I will, I will. I'm dying

31:10

to see it. So it's called Hyperactive. We'll

31:12

all watch it together, listeners. He looks a

31:14

bit like Yahoo Serious. Beautiful.

31:18

Yeah, you can imagine. Apparently, this video

31:20

was so successful, it resulted in him

31:22

getting offers from companies like Chevrolet and

31:24

MTV to make videos for them.

31:27

But he apparently, though, he publicly said,

31:29

no, no, no, no, I'm not doing

31:31

that. I'm denouncing the whole concept of

31:33

advertising. It is below

31:35

prostitution, he said. So

31:38

he refused all the offers. Good for

31:40

him, I suppose. I don't know if he's monetized his

31:42

YouTube account. I bet he's kicking himself if he hasn't,

31:44

because he's now had about 15 million views. Anyway,

31:47

very, very entertaining. That is my pick

31:49

of the week. Very

31:52

cool. Carole, what's

31:54

your pick of the week? OK, so

31:56

I've been spending a lot of my

31:59

time hanging out with people that like

32:01

games. Like, I mean, board games and

32:03

puzzles and cards and Sudoku and

32:05

killer Sudoku and all this kind of stuff. Yeah.

32:08

And, you know, I like cards. I'm not really into

32:10

the other stuff. And so

32:12

I convinced my counterparts that we

32:15

could learn cribbage. Oh, have

32:17

you ever played cribbage? Not for many,

32:19

many years. That's the one where you have these

32:21

little matchsticks. There's lots of holes on like a...

32:24

Yes. A long,

32:26

thin wooden sort

32:28

of block, isn't it, where you have to move bits? Yeah.

32:31

Yeah, I haven't done it for years. So, invented in

32:33

the 17th century by Sir John Suckling,

32:35

had to say that name, an English

32:38

poet, playwright and card enthusiast. Right.

32:42

And basically the game, as you said, has a special

32:44

wooden board with pegs to track the points up to

32:46

121. First one

32:48

to get all the points wins. And I won't

32:50

say it was easy to pick up because I've never played

32:52

cribbage in my life. Right. And so,

32:55

obviously it took me a week of study, about an

32:57

hour or two a day. But still, that's a significant

32:59

amount of time to learn a game. What?

33:02

You had to... Well, hang on. Oh, yeah.

33:05

Hours every day to study it before you could play? Well,

33:07

you can play, but just to understand the

33:09

strategy of like, what do you discard and

33:11

what do you do and how do you

33:13

actually do well at the game? So now

33:15

you're a cribbage master, is what you're saying?

33:18

No, but I'm kind of addicted. I'm kind

33:20

of addicted. It's great fun. You can really

33:22

nerd out. I've downloaded

33:24

a few cribbage apps like to play around

33:26

with, but I'm not at the point of

33:28

recommending any of those yet. However, you

33:30

can noodle about on the Cribbage

33:32

JD website, link in the show

33:35

notes. You can play as a

33:37

guest and you can figure out the rules from that,

33:39

although I do suggest, you know, watching a few, you

33:42

know, tutorials on the tubes first and

33:45

then probably watch them again and again to try and get

33:47

the maths in your head. But

33:50

it gets a huge enthusiastic thumbs up from me. I

33:53

even bought my beloved Yeti, who doesn't listen to this

33:55

show, a cribbage board, but

33:57

in the style of a wooden canoe,

33:59

and it's called The

34:01

Paddler's Cribbage, and I got it from L.L.

34:04

Bean, and I totally love it, and link

34:06

in the show notes to that too. But don't

34:08

tell him. Oh, that sounds lovely. No, we

34:10

won't tell him. Nobody

34:12

who knows the Yeti, tell him. He's

34:15

not listening to podcasts, but we don't want to ruin the surprise.

34:18

Yeah, that's right. Well that just about

34:20

wraps up the show for this week. You can

34:22

follow us on Twitter at SmashinSecurity. No G.

34:24

Twitter wouldn't let us have a G. And don't forget

34:27

to ensure you never miss another episode. Follow

34:29

Smashing Security in your favorite podcast apps,

34:32

such as Apple Podcasts and Pocket Casts.

34:34

And thank you to our episode sponsors,

34:36

1Password, Vanta, and SentinelOne, and of course

34:38

to our wonderful Patreon community. It's thanks

34:40

to them all that this show is

34:43

free. For episode show notes, sponsorship info,

34:45

guest lists, and the entire back catalogue

34:47

of more than 385

34:50

episodes, check out smashingsecurity.com. Until

34:52

next time, cheerio. Bye-bye. Bye.

34:58

Beautiful.

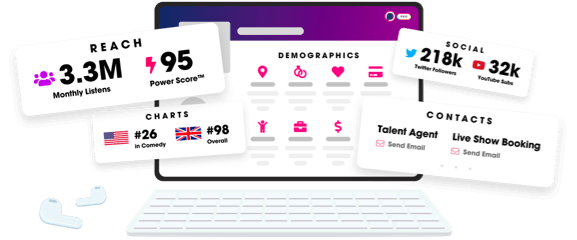

Unlock more with Podchaser Pro

- Audience Insights

- Contact Information

- Demographics

- Charts

- Sponsor History

- and More!

- Account

- Register

- Log In

- Find Friends

- Resources

- Help Center

- Blog

- API

Podchaser is the ultimate destination for podcast data, search, and discovery. Learn More

- © 2024 Podchaser, Inc.

- Privacy Policy

- Terms of Service

- Contact Us